Create Redshift Tables

Create custom-named database in Redshift

Important Notes:

- Redshift Serverless is preconfigured with the default database

dev. If you are using it as the Firehose destination database, this setup step is not required. Please ignore.

Steps to Create Database

- Go to Redshift Serverless Console

- Click on your workgroup

- Go to the Query Editor

- On the left side click on your Serverless workgroup you created earlier

- Select “Database user name and password”

- Leave the database name as “dev” which is the default from earlier.

- Enter the previously created username and password

- Click Create Connection

- Click on Create and then Database

- Enter the database name and choose your user or group from the dropdown.

- Click on Create database

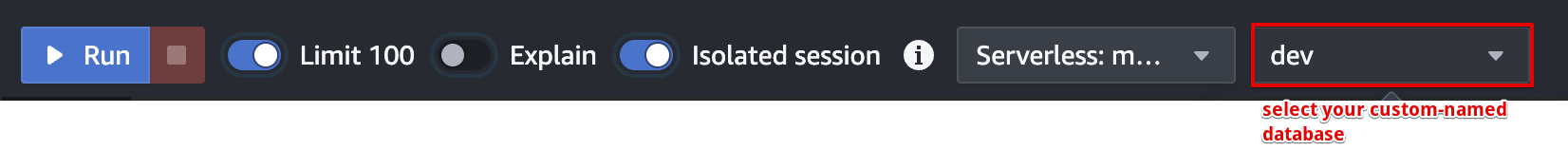

After creating your own custom-named database it is now time to create the necessary tables. For all following SQL queried make sure you are operating in your new custom-named database and not in the default one (dev). To do so, ensure your database is selected next to the run button of the redshift Query editor.

Create Staging Table in Redshift

-

Important Notes:

- Firehose can only send data to a single Redshift table.

- The provided table creation commands are based on the default, non-customized playbook.

- If you have customized the example playbook, you must modify the table structure to match your implementation.

- Non-staging tables should be configured based on your specific requirements, as their setup is entirely under your control.

- The provided schema is an example used for testing and should be adjusted as needed for production use.

-

Steps to Create the Staging Table

- Open the Amazon Redshift Serverless console.

- Click on your workgroup.

- Open the Query Editor (click on “Query Data”).

- Run a SQL query to create a staging table (for users + events). The following query is an example and must be modified to match your use case:

-

CREATE TABLE staging_firehose ( anonymousId VARCHAR(MAX), context VARCHAR(MAX), integrations VARCHAR(MAX), messageId VARCHAR(MAX), originalTimestamp VARCHAR(MAX), properties VARCHAR(MAX), receivedAt VARCHAR(MAX), sentAt VARCHAR(MAX), timestamp VARCHAR(MAX), traits VARCHAR(MAX), type VARCHAR(MAX), event VARCHAR(MAX), userId VARCHAR(MAX), writeKey VARCHAR(MAX) ); - Stay in the Query Editor for next steps.

Create the Events Table in Redshift

-

Open the Query Editor in Redshift.

-

Run a SQL query to create an events table. The following query is an example and must be modified to match your use case:

CREATE TABLE events ( anonymousId VARCHAR(MAX), context VARCHAR(MAX), integrations VARCHAR(MAX), messageId VARCHAR(MAX), originalTimestamp VARCHAR(MAX), properties VARCHAR(MAX), receivedAt VARCHAR(MAX), sentAt VARCHAR(MAX), timestamp VARCHAR(MAX), type VARCHAR(MAX), event VARCHAR(MAX), userId VARCHAR(MAX), writeKey VARCHAR(MAX) ); -

Stay in the Query Editor for next steps.

Create the Users Table in Redshift

-

Open the Query Editor in Redshift.

-

Run a SQL query to create a users table. The following query is an example and must be modified to match your use case:

CREATE TABLE users ( anonymousId VARCHAR(MAX), context VARCHAR(MAX), integrations VARCHAR(MAX), messageId VARCHAR(MAX), originalTimestamp VARCHAR(MAX), receivedAt VARCHAR(MAX), sentAt VARCHAR(MAX), timestamp VARCHAR(MAX), traits VARCHAR(MAX), type VARCHAR(MAX), event VARCHAR(MAX), userId VARCHAR(MAX), writeKey VARCHAR(MAX) ); -

Stay in the Query Editor for next steps.

-

Grant Table Read/Write Access

-

To enable the Scheduled Query feature in Redshift (which will automatically insert data from the staging table into the final tables), you must grant the Redshift namespace/cluster role’s user in database access to the newly created tables.

-

To create this user one can follow one of the two routes. One being either jumping to Scheduled Query section and running a query to fail once which will automatically create user for it. Second one being creating user for the role manually. To manually create the user run modified version this query:

// Make sure that <ROLE_NAME> is 1 to 1 with the role associated with our Redshift. CREATE USER IAMR:<ROLE_NAME> PASSWORD '<PASSWORD>'; // e.g. CREATE USER IAMR:firehosedocumentationredshiftrole PASSWORD 'Admin123'a. Confirm the user exists after following one of the routes to create the user.

SELECT * FROM pg_user; // Output must include IAMR:<ROLE_NAME> -

Run the following SQL query, replacing

<ROLE_NAME>with the IAM role you configured earlier, and make sure that table names matches your table names. The ones below (events, staging_firehose and users) are our example tables. Please ensure it executes successfully before proceeding.GRANT INSERT, SELECT ON TABLE events TO IAMR:<ROLE_NAME>; // e.g. "IAMR:firehosedocumentationredshiftrole" GRANT INSERT, SELECT ON TABLE staging_firehose TO IAMR:<ROLE_NAME>; GRANT INSERT, SELECT ON TABLE users TO IAMR:<ROLE_NAME>; GRANT DELETE ON TABLE staging_firehose TO IAMR:<ROLE_NAME>; -

Stay in the Query Editor for next steps.

-

Set up a Scheduled Query

-

Paste one of the following queries into the Query Editor in Redshift.

- The first query is a basic example meant for initial testing.

- The second query is a more advanced version that ensures users' information is updated dynamically.

- Important: These are examples and should be customized to meet your actual data requirements.

-- This is pure example and won't satisfy real use cases after your testing please update this code. A more realistic use case version can be found in the second script. -- Query 1 -- Insert data into table1 INSERT INTO events (anonymousId, context, integrations, messageId, originalTimestamp, receivedAt, sentAt, "timestamp", type, event, userId, writeKey) SELECT anonymousId, context, integrations, messageId, originalTimestamp, receivedAt, sentAt, "timestamp", type, event, userId, writeKey FROM firehose_staging; -- Insert data into table2 INSERT INTO users (anonymousId, userId) SELECT anonymousId, userId FROM firehose_staging; -- (Optional) Delete processed data from staging table DELETE FROM firehose_staging WHERE receivedAt <= SYSDATE; -- More intricate version of the script where user table will get updated whenever more information comes through for a specific anonymousID and for new users new records will be created but unlike previous script anonymousID will be unique. -- Query 2 -- Insert new events into the events table INSERT INTO events (anonymousId, context, integrations, messageId, originalTimestamp, receivedAt, sentAt, "timestamp", type, event, userId, writeKey) SELECT anonymousId, context, integrations, messageId, originalTimestamp, receivedAt, sentAt, "timestamp", type, event, userId, writeKey FROM firehose_staging; -- Step 1: Update existing users with missing information UPDATE users SET userId = COALESCE(users.userId, staging.userId), name = COALESCE(users.name, staging.name), age = COALESCE(users.age, staging.age) FROM firehose_staging AS staging WHERE users.anonymousId = staging.anonymousId; -- Step 2: Insert new users that do not already exist in the users table INSERT INTO users (anonymousId, userId, name, age) SELECT staging.anonymousId, staging.userId, staging.name, staging.age FROM firehose_staging AS staging LEFT JOIN users ON users.anonymousId = staging.anonymousId WHERE users.anonymousId IS NULL; -- (Optional) Delete processed data from the staging table DELETE FROM firehose_staging WHERE receivedAt <= SYSDATE; -

Click the Schedule button in the top-right corner to configure the query to run periodically. This ensures data from the staging table is continuously moved to the appropriate tables.

-

Select the IAM role that has access to the Redshift workgroup.

-

Select the workgroup you created earlier.

-

Enter a name and description for the scheduled query.

-

Configure any additional settings based on your requirements.

-

Click Schedule Query.

Optional Steps to Check Status of Scheduled Queries

If “You don’t have permission to view the scheduled query history.” error pops up when trying to view the status of Scheduled Query’s status amazon account trying to view the history needs to be added to trusted accounts in the role’s policy settings.

- To achieve this append this section to the trusted policy of the associated role to Redshift namespace/cluster.

{

"Sid": "AssumeRole",

"Effect": "Allow",

"Principal": {

"AWS": [

"arn:aws:sts::00000000000:assumed-role/AWSXXXXXXXX_MRIntegrationsEngineers_000000000/[email protected]"

]

},

"Action": "sts:AssumeRole"

}- After appending this section whole policy should look like this:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": [

"firehose.amazonaws.com",

"events.amazonaws.com",

"s3.amazonaws.com",

"redshift.amazonaws.com"

]

},

"Action": "sts:AssumeRole"

},

{

"Sid": "AssumeRole",

"Effect": "Allow",

"Principal": {

"AWS": [

"arn:aws:sts::00000000000:assumed-role/AWSXXXXXXXX_MRIntegrationsEngineers_000000000/[email protected]"

]

},

"Action": "sts:AssumeRole"

}

]

}Updated 5 months ago

If you are using Redshift Serverless, please go to: Redshift Serverless - Create a Firehose Stream If using Provisioned Cluster, please go to: Redshift Provisioned Cluster - Create a Firehose Stream