Firehose MetaRouter Setup

MetaRouter Setup

After completing the Firehose and Redshift setup, you can configure the Amazon Data Firehose integration in MetaRouter. Follow the steps below to complete the setup process.

Requirements

Before proceeding, ensure you have the following:

- AWS Access Key and Secret Key
  - These should have been recorded during the "Get Access Key" stage.
  - If you did not save them or have lost them, generate a new key by following the steps here (link to section).
- Firehose Stream Name
  - This should have been recorded during the "Create Firehose Stream" stage.
  - If not, you can find it in the Firehose Streams list on the Amazon Data Firehose page.
- Region
  - Located in the top-right corner of the AWS Management Console.
  - This is the region where your Firehose stream was created, e.g. eu-north-1.
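Before entering these values in MetaRouter, it can help to confirm that the key pair, stream name, and region agree with each other. The following is a minimal sketch, not part of the MetaRouter setup itself. It assumes the `boto3` package is installed and that the key is allowed to call `firehose:DescribeDeliveryStream`; neither is stated in this guide. The function names `stream_status` and `check_stream` are hypothetical.

```python
def stream_status(response: dict) -> str:
    """Extract the status field from a DescribeDeliveryStream response."""
    return response["DeliveryStreamDescription"]["DeliveryStreamStatus"]


def check_stream(access_key: str, secret_key: str, region: str, stream_name: str) -> str:
    """Look up the stream with the given credentials and return its status."""
    import boto3  # imported lazily so stream_status() works without boto3

    client = boto3.client(
        "firehose",
        region_name=region,
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
    )
    # Raises botocore.exceptions.ClientError if the stream does not exist in
    # this region or if the credentials are rejected.
    response = client.describe_delivery_stream(DeliveryStreamName=stream_name)
    return stream_status(response)
```

Calling `check_stream(...)` with your own values should return `ACTIVE` for a stream that is ready to receive data. An error at this point usually points to a wrong region, a misspelled stream name, or a key without the needed permission.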

Adding an Amazon Data Firehose Integration

To integrate Firehose with MetaRouter:

- Open the Integration Library in MetaRouter.

- Add an Amazon Data Firehose integration.

- Fill out the Connection Parameters as follows:

| Connection Parameter | Description |
|---|---|
| ACCESS_KEY | AWS access key used for authentication. |
| REGION | AWS region where the Firehose stream is deployed. |
| SECRET_KEY | AWS secret key used for secure API access. |
| STREAM_NAME | Name of the Firehose stream receiving event data. |

- Customize the Playbook (if needed) to match your Redshift table schema. The playbook must be compatible with your Redshift tables.

- Deploy the pipeline with the integration.

- Use the generated AJS file from the pipeline.

- Test the integration to verify that events are properly inserted into Redshift tables.
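Typos in the Connection Parameters (a region display name instead of its code, a stray space in the stream name) are easy to make when copying values by hand. The sketch below is one hypothetical way to sanity-check the four values before pasting them into MetaRouter. The stream-name rule follows the 1 to 64 character limit and allowed character set in the AWS Firehose API reference; the region pattern is only a heuristic assumption, and `find_problems` is an illustrative name, not a MetaRouter or AWS function.

```python
import re

# Firehose stream names: 1-64 characters from letters, digits, '_', '.', '-'.
STREAM_NAME_RE = re.compile(r"[a-zA-Z0-9_.-]{1,64}")
# Heuristic shape of a region code such as eu-north-1 or us-gov-west-1 (assumption).
REGION_RE = re.compile(r"[a-z]{2}(-[a-z]+)+-\d+")

REQUIRED_KEYS = ("ACCESS_KEY", "SECRET_KEY", "REGION", "STREAM_NAME")


def find_problems(params: dict) -> list:
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    for key in REQUIRED_KEYS:
        value = params.get(key, "")
        if not value:
            problems.append(f"{key} is missing")
        elif value != value.strip():
            problems.append(f"{key} has leading or trailing whitespace")

    region = params.get("REGION", "")
    if region and region == region.strip() and not REGION_RE.fullmatch(region):
        problems.append("REGION does not look like a region code such as eu-north-1")

    stream = params.get("STREAM_NAME", "")
    if stream and stream == stream.strip() and not STREAM_NAME_RE.fullmatch(stream):
        problems.append("STREAM_NAME contains characters Firehose does not allow")

    return problems
```

An empty result only means the values are well formed; it does not prove that the key is valid or that the stream exists.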

How to Test Your Integration

Note: Data ingestion may take time depending on your Firehose buffer settings.

-

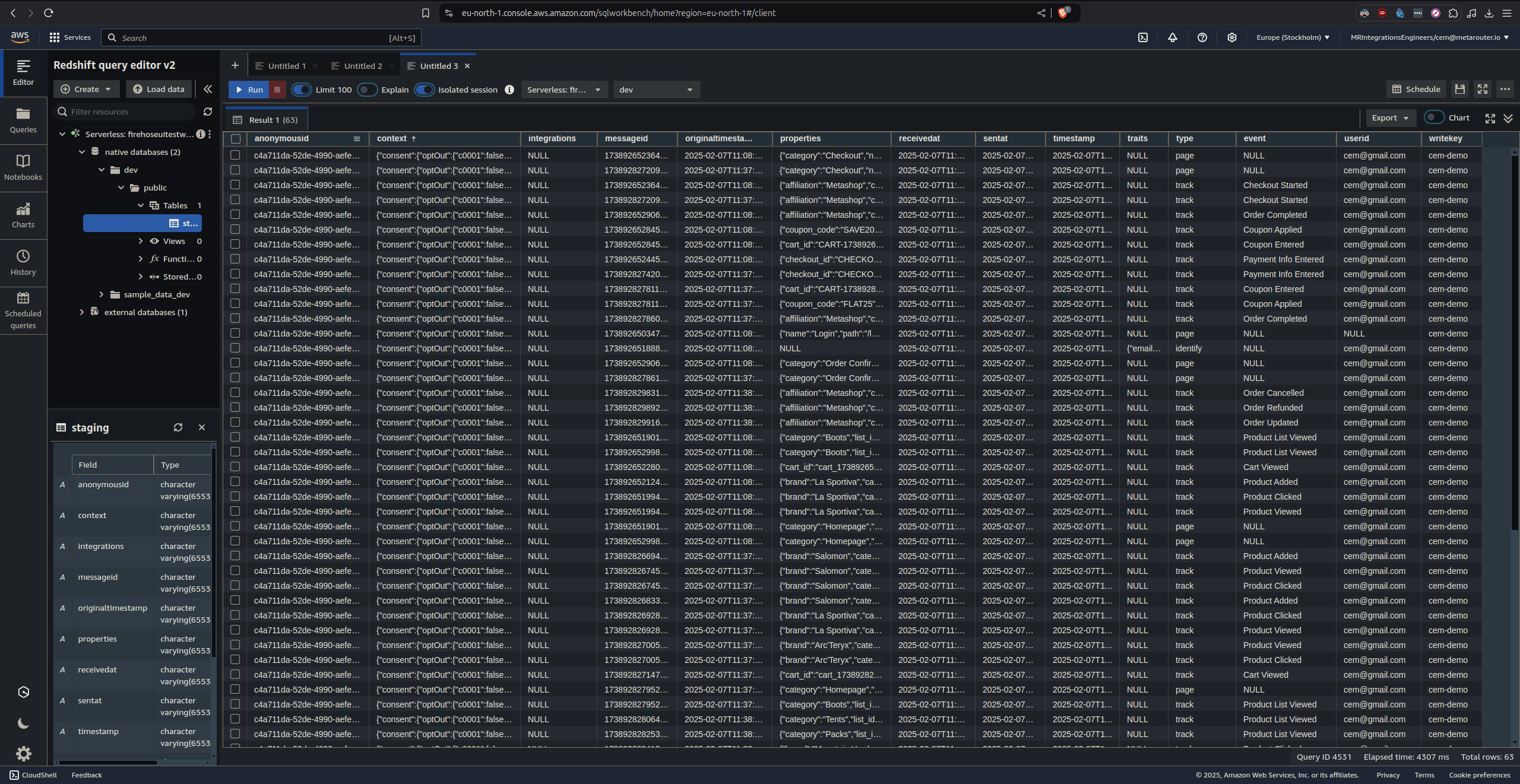

Open the Redshift Query Editor.

-

Run the following query to check if data has been received in the staging table:

SELECT * FROM firehose_staging; -- Check staging table -

If data is missing from the staging table, check your configured tables, as the Scheduled Query may have already moved the data. You can verify it using:

SELECT * FROM events; -- Example of a table populated by the Scheduled Query -

If the query is successful, you should see incoming event data similar to the expected format.
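Because Firehose buffers data before delivering it, the first query may legitimately return nothing. Rather than re-running it by hand, the check can be repeated on an interval. The sketch below assumes a hypothetical `count_rows` callable that you supply (for example, one that runs `SELECT COUNT(*) FROM firehose_staging` through whichever Redshift client you use). The 10-minute timeout and 30-second interval are arbitrary defaults, not values taken from this guide.

```python
import time
from typing import Callable


def wait_for_rows(
    count_rows: Callable[[], int],
    timeout_s: float = 600.0,
    interval_s: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll count_rows() until it reports at least one row or the timeout elapses."""
    waited = 0.0
    while True:
        if count_rows() > 0:
            return True  # data has arrived
        if waited >= timeout_s:
            return False  # gave up; check buffer settings and the target tables
        sleep(interval_s)
        waited += interval_s
```

If this returns `False` for the staging table, run the same check against a table populated by the Scheduled Query before concluding that no data arrived.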
