Google BigQuery

What Is Google BigQuery?

Google BigQuery is a fully-managed, server-less data warehouse that enables scalable and fast SQL queries using the processing power of Google's infrastructure. It is designed to handle large datasets, allowing users to analyze terabytes of data in seconds and petabytes in minutes.

MetaRouter provides an efficient Extract, Transform and Load (ETL) platform that streams structured customer data into BigQuery in real-time. Ensure that data is always structured properly with MetaRouter' parameter-by-parameter data mapping and transformation playbooks.

What are the benefits of integrating MetaRouter with Google BigQuery?

- Private data stream that can never be co-mingled with anyone else's data.

- In-stream transformations providing highly structured data to the warehouse, improving data availability and reducing costly errors.

- Data streamed into BigQuery near-real-time - no batching required.

- Addressable third-party IDs are provided within your warehouse when paired with the Sync Injector.

Important Notes and Limitations

-

Workload Identity:

- If your MetaRouter deployment runs on Google Kubernetes Engine (GKE), you can authenticate with BigQuery using Workload Identity instead of a JSON service account key. Workload Identity allows your MetaRouter pods to authenticate directly with Google Cloud APIs without managing static credentials, providing a more secure and maintenance-free authentication method.

- The flow for Workload Identity is the recommended path.

- Workload Identity is supported only for clusters running in Google Cloud (GKE). Deployments hosted outside of GCP must use the Service Account JSON key authentication method.

-

Event Name and Table Matching:

-

The event name specified in MetaRouter will match the table name inside of BigQuery. Changing the name of the event will create a new table.

- If you select

falseforBIG_QUERY_OPTIONSunder the connection parameters, then you do not have to givebigquery.datasets.createpermission in the IAM role--the integration will not create a new dataset, and no events will be delivered if the event does not exist.

- If you select

-

We cannot route events to custom names tables.

-

-

Event Table Schema Consistency:

- Once an event table is created in BigQuery, its schema must remain consistent. MetaRouter does not programmatically delete or alter the structure of existing event tables to prevent data inconsistencies and ensure data integrity. This means any changes to the event structure should be carefully planned and manually handled.

- MetaRouter cannot alter or delete existing columns, but we can add new columns based on new parameters being included in an event payload and mapped to the destination. This allows for extending the data model as new requirements emerge, without disrupting the existing data flow. However, ensure that new columns follow the established schema and data type conventions to maintain consistency.

-

Data Type Consistency:

- It is crucial to maintain data type consistency for each field across all events. For instance, if a field is initially set as a string, all subsequent events must adhere to this data type. Inconsistent data types can cause ingestion errors and complicate data analysis. Establishing and following a strict schema ensures smooth data processing and accurate analysis.

-

Table Overrides Behavior:

- Events with a custom table name defined in

TABLE_OVERRIDESwill be routed to their specified tables. - If

DEFAULT_TABLE_OVERRIDEis not provided, any event not listed in TABLE_OVERRIDESwill be stored in a new table called events. - If

DEFAULT_TABLE_OVERRIDEis provided, events not defined inTABLE_OVERRIDESwill be sent to the specified default table. - Both

TABLE_OVERRIDESandDEFAULT_TABLE_OVERRIDEfields are optional. If neither is provided, each event will be stored in a BigQuery table named after the event (default behavior). - Example:

-

DEFAULT_TABLE_OVERRIDE: "catch_all_events" TABLE_OVERRIDES: identify: identities page: page_view products_searched: search

-

- Events with a custom table name defined in

Getting Started - From Your Google BigQuery Account

Step 1: Gather Project ID

- Navigate to the Google Cloud Console:

- Go to the Google Cloud Console.

- Select Your Project:

- Click on the project drop-down menu at the top of the page. If you have multiple projects, you can select the appropriate one from this list. If you don’t have a project, you can create a new one by clicking on "New Project".

- Locate the Project ID:

- Once you have selected your project, the Project ID will be displayed on the project dashboard. It is typically in the format of

my-project-id.

- Once you have selected your project, the Project ID will be displayed on the project dashboard. It is typically in the format of

Step 2: Choose Your Authentication Method

Path A — Workload Identity Flow

- Set up a service account and gather credentials:

- If you are using the Workload Identity flow, please reach out to your MetaRouter representative. We will generate a service account email for you.

- Grant Permissions:

- Assign the necessary roles to your service account (if using the Workload Identity flow, please assign roles to the service account email provided by the MetaRouter team). You will need to grant at least the "BigQuery Data Editor" roles. Please see more information on user roles below:

- BigQuery IAM Roles and Permissions - If you select

BIG_QUERY_OPTIONSasfalsein the connection parameters, then you do not have to give permission to create a dataset. - Control access to resources with IAM

- BigQuery IAM Roles and Permissions - If you select

- Authentication with Workload Identity

- Leave the

CREDENTIALSfield empty in your MetaRouter BigQuery integration configuration. When no credentials are provided, the integration automatically falls back to GCP default credentials, which Workload Identity supplies.

- Leave the

- Assign the necessary roles to your service account (if using the Workload Identity flow, please assign roles to the service account email provided by the MetaRouter team). You will need to grant at least the "BigQuery Data Editor" roles. Please see more information on user roles below:

Path B — Service Account Key Flow

- Create a Service Account:

- Navigate to the Google Cloud Console.

- Go to "IAM & Admin" section and select "Service Accounts".

- Click "Create Service Account", enter a name, and provide a description.

- Please be sure to associate this account with the project associated with the project ID you will use for this integration.

- Grant Permissions:

- Assign the necessary roles to your service account (if using the Workload Identity flow, please assign roles to the service account email provided by the MetaRouter team). You will need to grant at least the "BigQuery Data Editor" roles. Please see more information on user roles below:

- BigQuery IAM Roles and Permissions - If you select

BIG_QUERY_OPTIONSasfalsein the connection parameters, then you do not have to give permission to create a dataset. - Control access to resources with IAM

- BigQuery IAM Roles and Permissions - If you select

- Assign the necessary roles to your service account (if using the Workload Identity flow, please assign roles to the service account email provided by the MetaRouter team). You will need to grant at least the "BigQuery Data Editor" roles. Please see more information on user roles below:

- Create and Download Key File:

- After creating the service account, go to the "Keys" section and add a key.

- Select "Create new key", choose "JSON", and download the key file. This JSON file contains the credentials needed to authenticate your application with BigQuery.

- Use the Key File as

CREDENTIALS:- Upload the key file to your MetaRouter integration configuration as part of the setup process for BigQuery.

Getting Started - From Your MetaRouter Account

Adding a Google BigQuery integration.

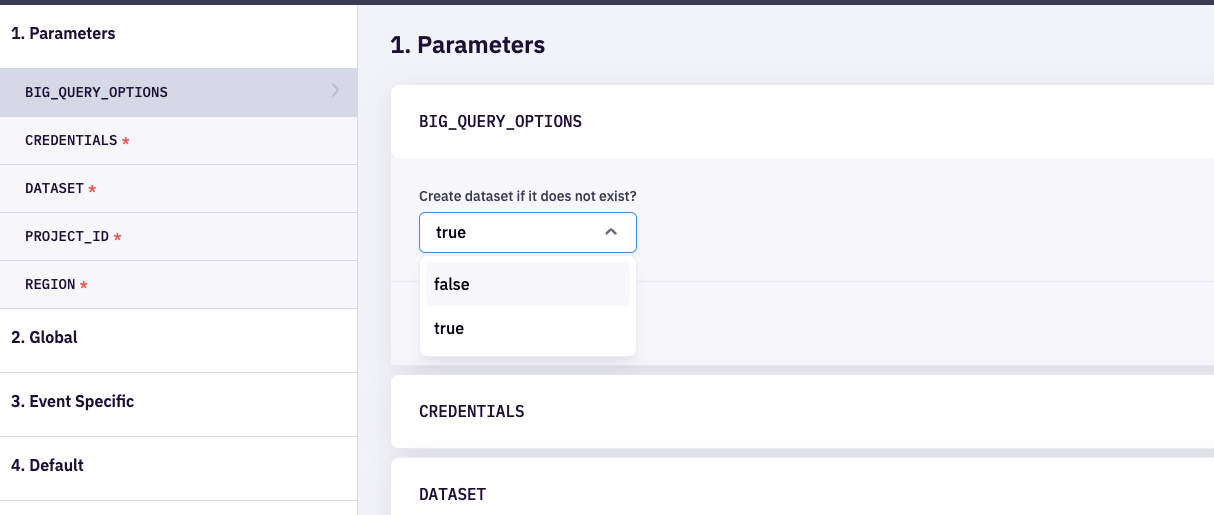

From the integration library, add a Google BigQuery integration. Then, fill out the Connection Parameters:

| Connection Parameter | Description |

|---|---|

BIG_QUERY_OPTIONS | Select true if you would like the integration to create a dataset if it does not exist for an event. If you select false, then you do not have to give bigquery.datasets.create permission in the IAM role--the integration will not create a new dataset, and no events will be delivered if the event does not exist. |

CREDENTIALS | JSON key from file downloaded from Google Cloud Console. |

DATASET | Name of dataset. If inputting a name of a dataset that doesn’t exist, then the integration will create a new dataset with the given name. Please follow dataset naming best practices as outlined https://cloud.google.com/bigquery/docs/datasets#dataset-naming. |

PROJECT_ID | Project ID gathered from Google Cloud Console. |

REGION | Location specified for your BigQuery dataset. ENUM accepting string values, please see https://cloud.google.com/bigquery/docs/locations#regions. |

DEFAULT_TABLE_OVERRIDE | Specifies a default table name for events not explicitly mapped. Optional, please see Important Notes section. |

TABLE_OVERRIDES | A key-value object where the key represents an event name, and the value specifies the corresponding BigQuery table name. Optional, please see Important Notes section. |

Event Mappings

Due to the complexity of event mapping in BigQuery, it is highly recommended to reach out to the MetaRouter team and collaborate with a solutions engineer. While MetaRouter includes recommended parameters under Global mapping, you will need to accurately map events when initially building out the integration. This collaboration ensures precise and efficient configuration tailored to your specific needs.

Global

Global mappings will be applied to all events. If your parameter names do not match the Expected Inputs provided, you will need to overwrite the Inputs provided with your own.

Ensuring event and data consistency is crucial when using BigQuery with MetaRouter. Please see Notes and Limitations above.

| Output Key | Description | Expected Input |

|---|---|---|

id | String: A unique identifier for the message. This field ensures each event can be uniquely identified and tracked. | messageId |

user_id | String: A unique identifier for the user. | userId |

previous_id | String: The identifier for the previous event. | previousId |

anonymous_id | String: An anonymous identifier for the user. | anonymousId |

original_timestamp | Timestamp: The original timestamp of the event. | originalTimestamp |

received_at | Timestamp: The timestamp when the event was received. | receivedAt |

sent_at | Timestamp: The timestamp when the event was sent. | sentAt |

timestamp | Timestamp: The timestamp of the event. | timestamp |

integrations | String: Integration-specific options or overrides. | integrations |

type | String: The type of event (e.g., identify, track). | type |

event | String: The name of the event. | event |

name | String: The name associated with the event. | name |

category | String: The category associated with the event. | category |

| — | The context object in MetaRouter provides useful information about the environment in which an event occurred. This can include details about the page, device, browser, location, and more. | context |

| — | Traits are attributes that describe a user or a group. When you call the identify method, you can pass in a traits object that includes key-value pairs of user information such as name, email, phone number, etc. | traits |

| — | Properties are specific to events and provide additional context about the event itself. For instance, in a purchase event, properties might include details like the order value, product ID, quantity, and payment method. These are different from traits because they are transient and event-specific rather than being persistent attributes of a user. | properties |

Additional Google BigQuery Documentation

Google BigQuery - Data Types

Updated 5 days ago